Putting a Face to Finance

The Challenge

Financial institutions are always looking for ways to use cutting-edge technology to engage customers, deliver value, and showcase their technological expertise. Because many users have little knowledge of the investment world, I wanted to equip them with knowledge about how to nurture their financial standing in a way that works for them.

The Solution

I utilized Amazon’s Facial Emotion Recognition technology to create an interactive experience that detects and analyzes user reactions to stimuli, and aligns them with corresponding personality types and investment strategies.

Process

Understand

Research

Sketch

Design

Implement

Evaluate

Understand & Research

One of the keys to understanding the problem I was tackling was to bring in a financial expert who could speak to the experiences of the people I was designing for. I set up multiple interview sessions with a fin-tech expert so I could understand the landscape and identify the problems of everyday users.

Ideation

After spending time analyzing the findings and discussions with the fin-tech expert, I began to narrow in on a specific pain point: users lacked fundamental knowledge about how to invest, and faced a barrier to entry for financial expertise.

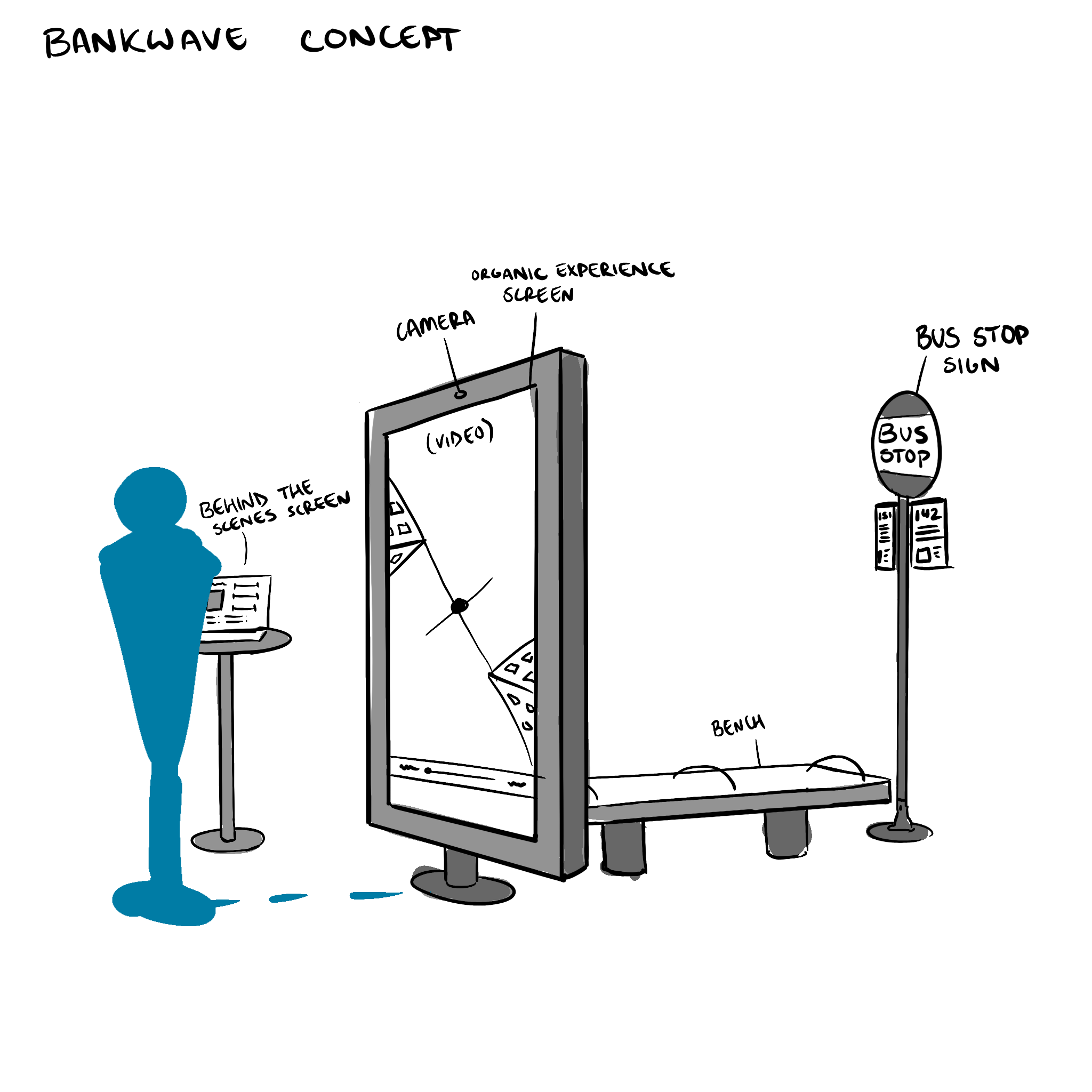

I explored new and emerging technologies that could be possible solutions, and sketched out visuals for what those experiences would be like. This helped to communicate the essence of the concept before spending any time on wireframes, prototypes, etc.

After bringing the ideas in front of a panel of users, the clear contender was a facial detection implementation, where a video camera would be used to capture emotions, analyze reactions to various video stimuli, and recommend financial advice based on those reactions.
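As a rough illustration of the "capture emotions" step, here is a minimal sketch of picking the strongest emotion for one detected face. It assumes the service returns a list of emotion labels with confidence scores per face, which is the shape Amazon Rekognition's DetectFaces response uses (`Emotions`, `Type`, `Confidence`). The function name, the 50% threshold, and the sample data are hypothetical and not taken from the project.

```python
from typing import Optional


def dominant_emotion(face_detail: dict, min_confidence: float = 50.0) -> Optional[str]:
    """Return the highest-confidence emotion label, or None if nothing is confident enough."""
    emotions = face_detail.get("Emotions", [])
    if not emotions:
        return None
    # Pick the emotion entry with the largest confidence score.
    top = max(emotions, key=lambda e: e["Confidence"])
    # The threshold is an assumption for illustration, not a project value.
    if top["Confidence"] < min_confidence:
        return None
    return top["Type"]


# Hypothetical sample shaped like one entry of a DetectFaces "FaceDetails" list.
sample_face = {
    "Emotions": [
        {"Type": "HAPPY", "Confidence": 91.2},
        {"Type": "CALM", "Confidence": 6.1},
        {"Type": "SURPRISED", "Confidence": 2.7},
    ]
}

print(dominant_emotion(sample_face))
```

In a setup like this, one such label would be recorded per video stimulus and passed on to the recommendation step.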

Storyboarding

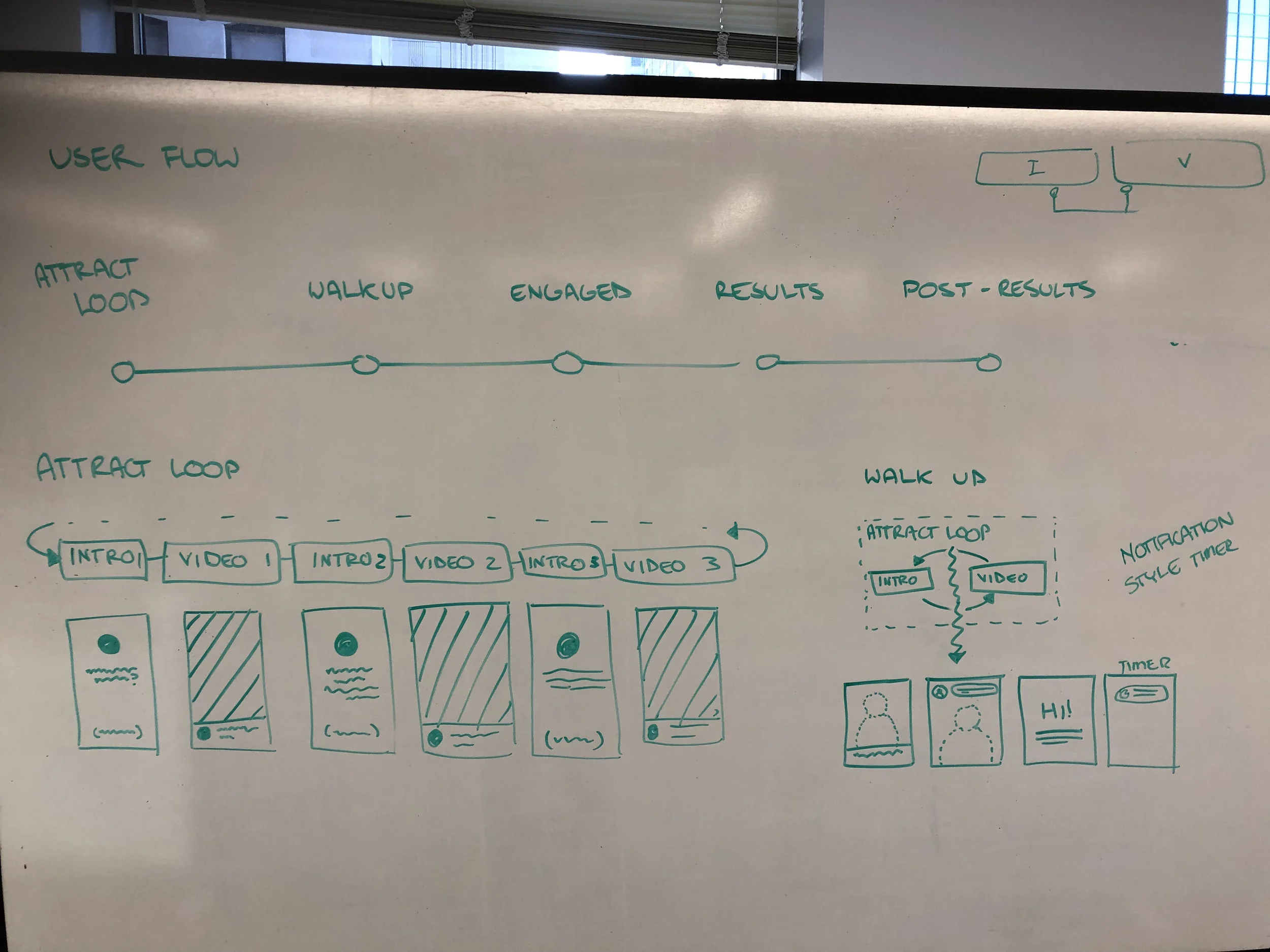

With a clear direction for the concept, I next wanted to define what that flow would look like. What do we have to tell the user to prompt them about what we’re doing? How would we communicate the complexity of financial investing? Storyboarding helped me grasp the full scope of the experience, and it allowed for some rapid iteration and user testing. I used Adobe XD and its rapid animated prototyping capabilities to create animated storyboards for the phases of the experience.

User Testing

At this point, I had a rough prototype that gave a feel for the experience. I was able to use an iPad to simulate the larger screen while delivering the same emotional experience. These tests taught me:

The experience needed an idle loop to be a true passerby experience.

The setup to the experience was confusing to users, and needed to be communicated more effectively.

I needed to deliver immediate value out of the experience, rather than solely refer them to a financial advisor.

Second Iteration

Inspired by the insights from user testing, I designed a second prototype, with a clearer understanding of the distinct phases of the experience.

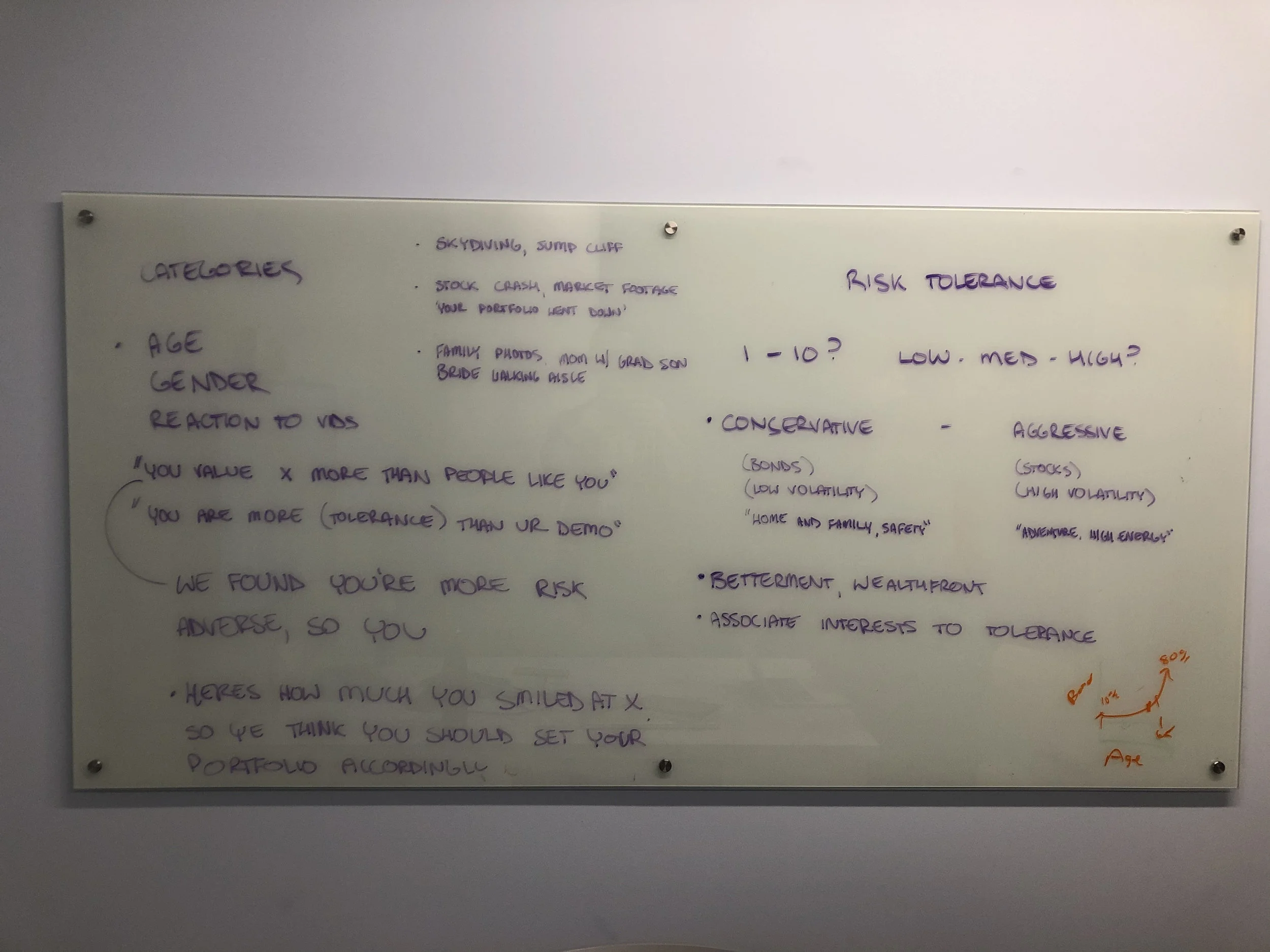

Risk Matrix

To solve the problem of delivering immediate user value, I developed a matrix for the varying risk categories. I worked with the fin-tech expert to flesh out the matrix based on the data points we were able to detect, and crossed those with the reactions to the video stimuli.

This gave users customized financial advice that was tailored to them, and delivered specific knowledge about financial investing options.
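To make the idea of crossing stimuli with reactions concrete, here is a minimal sketch of such a matrix as a lookup table. The stimulus names, risk categories, cell values, and majority-vote rule are all hypothetical placeholders; the actual matrix was worked out with the fin-tech expert and is not reproduced here.

```python
from collections import Counter

# Hypothetical matrix: (video stimulus, detected reaction) -> risk category.
RISK_MATRIX = {
    ("market_drop", "CALM"): "aggressive",
    ("market_drop", "FEAR"): "conservative",
    ("market_drop", "SAD"): "conservative",
    ("big_win", "HAPPY"): "aggressive",
    ("big_win", "CALM"): "moderate",
    ("big_win", "SURPRISED"): "moderate",
}


def risk_category(reactions, default="moderate"):
    """Map each (stimulus, reaction) pair to a category and return the most frequent one."""
    if not reactions:
        return default
    votes = Counter(
        RISK_MATRIX.get((stimulus, emotion), default)  # unknown pairs fall back to the default
        for stimulus, emotion in reactions
    )
    return votes.most_common(1)[0][0]


# Hypothetical session: one reaction recorded per stimulus shown.
session = [("market_drop", "CALM"), ("big_win", "HAPPY"), ("big_win", "CALM")]
print(risk_category(session))
```

A plain lookup table keeps the mapping easy to review with a non-technical expert, since each cell can be discussed and changed on its own.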

Bringing The Fun

Something I realized during the user testing was the lack of interest. Users felt the experience was bland and uninteresting, which contrasted with the fascinating technology we were working with. Delivering a giant chunk of text with the investment suggestions was not an effective way to deliver insights.

To tackle this, I created various personalities that the investment advice could fit into. Users would be aligned with a risk personality, and be given fun stocks to invest in, such as cheese futures, unicorn horns, and space crops.

RISK PERSONALITIES

User Testing

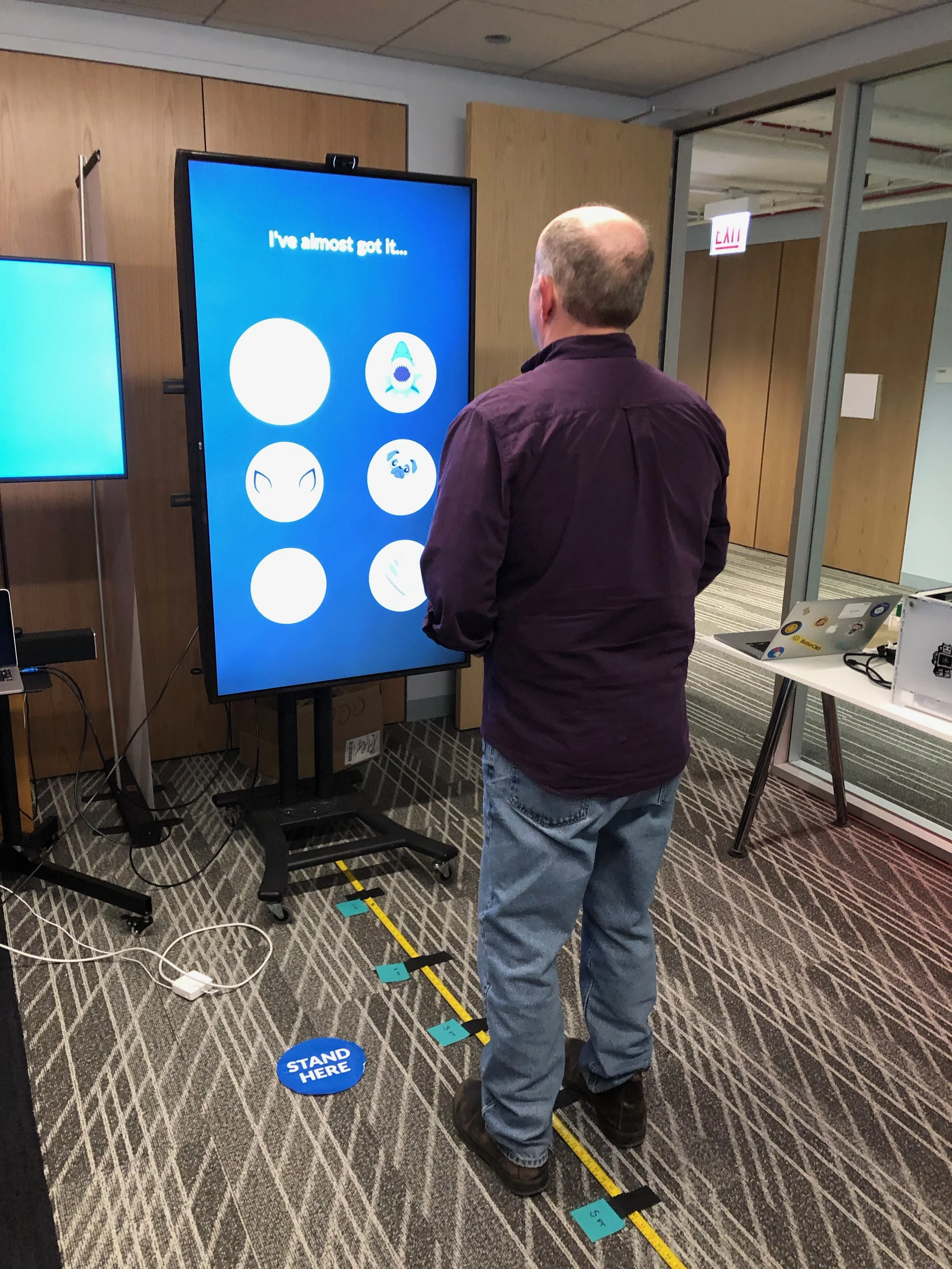

The changes I made to the demo were significant enough that I needed to test them and get feedback on whether the personalities were working with the new and improved personality matrix.

Discoveries

The Simplex Fix

I also needed to test the limits of the hardware we were working with. One of the challenges I discovered through testing was the distance at which the facial recognition tech could successfully analyze emotions. Rigorous testing with various skin tones, genders, features, and ages allowed me to home in on the ideal distance from the camera to effectively capture facial emotions.

Personalization

Amazon’s facial analysis gave us a breadth of information that I was able to use to capture the engagement of the user. While features like glasses or a beard didn’t affect the financial investment recommendation, calling them out added a massive moment of delight.

I was able to integrate these recognized features with the results matrix, and when I put it in front of users, the feature was met with resounding excitement. Users were able to recall their risk personality more often, and shared anecdotes of the experience with others, urging them to demo the experience so they could compare their results.
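Here is a minimal sketch of how recognized features could be folded into a result message without touching the recommendation itself. It assumes each attribute arrives as a boolean value with a confidence score, as in Amazon Rekognition's `Eyeglasses` and `Beard` fields. The function names, the 90% threshold, the wording, and the personality name are hypothetical.

```python
def detected_features(face_detail: dict, min_confidence: float = 90.0) -> list:
    """Return friendly labels for attributes detected with high confidence."""
    labels = {"Eyeglasses": "glasses", "Beard": "beard"}
    found = []
    for key, label in labels.items():
        attribute = face_detail.get(key, {})
        # Only mention a feature when it is present and the score is high.
        if attribute.get("Value") and attribute.get("Confidence", 0.0) >= min_confidence:
            found.append(label)
    return found


def personalized_headline(personality: str, face_detail: dict) -> str:
    """Build the result headline; the features are cosmetic and do not change the advice."""
    features = detected_features(face_detail)
    if not features:
        return f"You are {personality}."
    return f"You are {personality}, {' and '.join(features)} and all."


# Hypothetical sample shaped like part of a Rekognition face detail.
sample_face = {
    "Eyeglasses": {"Value": True, "Confidence": 99.1},
    "Beard": {"Value": False, "Confidence": 97.0},
}
print(personalized_headline("a Daredevil", sample_face))
```

Keeping the feature lookup separate from the risk logic reflects the point above: these details add delight but leave the recommendation unchanged.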

Forrester Conference

At the 2019 Forrester Conference, I debuted our technology, giving demos and talking with industry experts and C-suite executives about the implementation of our innovative technology. A number of leaders from top companies wanted to purchase the experience outright, and this sparked conversations about consulting engagements with Solstice.

Conference Retrospective

After I ran a retro for the Forrester conference to gain some feedback from the attendees and event staff, I worked to amplify the experience. From the Forrester showcase, I learned:

The lighting needed to be controlled so the camera picked up enough detail for facial recognition.

The experience needed to be more cohesive in a physical space than a single TV screen and banner.

New York Showcase

I designed a full cabinet and worked with an event production company to solve the lighting and cohesion problems. The completed experience ended up being two cabinets that could run two instances of the same experience, allowing onlookers to compare and contrast the experience, as well as accommodating the traffic of attendees who wanted to demo the tech themselves.